On August 20, 2024, we announced the general availability of the new AWS CloudHSM instance type hsm2m.medium (hsm2). This new type comes with additional features compared to the previous AWS CloudHSM instance type, hsm1.medium (hsm1), such as support for Federal Information Processing Standard (FIPS) 140-3 Level 3, the ability to run clusters in non-FIPS mode, increased storage capacity of 16,666 total keys, and support for mutual transport layer security (mTLS) between the client and CloudHSM.

The hsm1 instance type is reaching end-of-life and will be unavailable for service on December 1, 2025. See the hsm1 deprecation notification.

To address this, starting April 2025, AWS will attempt to automatically migrate existing hsm1 clusters to hsm2. During the migration, the hsm1 cluster will operate in limited-write mode.

If you want to use automatic migration and can accommodate restrictions on operations during the migration, make sure that your environment meets the prerequisites for automatic migration.

If you want to manage the migration yourself, you can do so before the automatic migration begins. In this post, we provide a few options for migration so you can choose the method that’s best for your situation and available resources.

To help facilitate high availability during migration, you can use a blue/green deployment strategy. If high availability isn’t a priority, there are two approaches: one where write operations are restricted and a second where you incur some downtime on operations. We also cover different use cases based on the operations performed during migration and provide rollback strategies.

Important considerations

When planning a migration to hsm2, consider the following:

Backup: We recommend keeping a backup of hsm1 until you have confirmed that all the required keys have been migrated to hsm2. You can configure a CloudHSM backup retention policy to manage backups.

Note: CloudHSM doesn’t delete a cluster’s last backup. See Configuring AWS CloudHSM backup retention policy for more information. You can also share the CloudHSM backups with other AWS accounts as described in Working with shared backups.

Availability and rollback: This post presents two main migration approaches. The first preserves availability but can become complex depending on the type of keys used and the operations performed during the migration period. The second is less complicated but might impact availability for a short time. Choose the migration process based on your availability requirements.

Blue/Green strategy: You can use a blue/green deployment strategy using an enterprise-specific method or a CloudHSM multi-cluster configuration.

Note: Multi-cluster configuration is supported for the CloudHSM CLI, the JCE provider, and the PKCS #11 library.

Client SDK version: Instance type hsm2 is compatible only with Client SDK version 5.9.0 and later. Upgrade your client SDK before starting migration. We recommend using the latest version.
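As a pre-migration check, the version requirement above can be expressed in a few lines of Java. This is only a sketch: the class and method names are hypothetical, and it assumes the installed Client SDK version is available as a plain dotted string such as 5.9.0 with no build suffix.

```java
import java.util.Arrays;

/** Sketch: compare a dotted Client SDK version against the 5.9.0 minimum for hsm2. */
final class SdkVersionCheck {
    // Minimum Client SDK version that works with hsm2, as stated in this post.
    static final int[] MINIMUM = {5, 9, 0};

    /** Parses "major.minor.patch" into three integers; missing parts count as 0. */
    static int[] parse(String version) {
        String[] parts = version.trim().split("\\.");
        int[] out = new int[3];
        for (int i = 0; i < 3 && i < parts.length; i++) {
            out[i] = Integer.parseInt(parts[i]);
        }
        return out;
    }

    /** Returns true when the version is 5.9.0 or later (lexicographic compare). */
    static boolean supportsHsm2(String version) {
        return Arrays.compare(parse(version), MINIMUM) >= 0;
    }
}
```

Comparing the parts numerically rather than as strings matters here, because a string comparison would rank 5.10.0 below 5.9.0.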

Deprecated algorithms: Make sure you’re not using any deprecated algorithms. You won’t be able to migrate to an hsm2 cluster using backup if you’re using any deprecated algorithms. If you’re using 3DES, you can continue to use it in hsm2 non-FIPS clusters only. See How to migrate 3DES keys from a FIPS to a non-FIPS AWS CloudHSM cluster.

Known issues: See the known issues with hsm2 to amend your tests and metrics as needed after migration.

Limited availability

There are two options: customer triggered and customer managed. Choose the approach that best fits your requirements. Note that for both options, you need to satisfy the migration criteria. See Prerequisites for migrating to hsm2m.medium.

Customer triggered

You can trigger migration of your hsm1 cluster from the AWS Management Console for CloudHSM or the AWS Command Line Interface (AWS CLI), and AWS will manage the migration process. Follow the detailed steps in Migrating from hsm1.medium to hsm2m.medium. This approach is suitable if you don’t perform frequent write operations such as creating or deleting users or keys. During the migration, the hsm1 cluster enters limited-write mode: write operations, including any performed by your application, are rejected until the migration is complete, while read operations remain unaffected. You can roll back the migration within 24 hours of starting it, and AWS manages the rollback. The customer triggered migration process is straightforward because no configuration changes are required. If your application requires write operations during migration, follow the customer managed option instead.
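If your application issues an occasional write that can tolerate a delay, one way to cope with limited-write mode is to hold writes on the application side until the migration finishes. The following is a minimal sketch of that idea, not a CloudHSM feature: the LimitedWriteGate class, its Kind enum, and the in-memory queue are all hypothetical, and the application must decide which of its operations count as writes.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Sketch: run reads immediately and defer writes while a migration is in progress. */
final class LimitedWriteGate {
    enum Kind { READ, WRITE }

    private boolean migrating;
    private final Deque<Runnable> deferredWrites = new ArrayDeque<>();

    /** Set to true when the migration starts and false when it completes. */
    void setMigrating(boolean migrating) {
        this.migrating = migrating;
    }

    /** Returns true if the operation ran now, false if it was queued for later. */
    boolean submit(Kind kind, Runnable op) {
        if (kind == Kind.WRITE && migrating) {
            deferredWrites.addLast(op);
            return false;
        }
        op.run();
        return true;
    }

    /** Replays queued writes in submission order; returns how many were replayed. */
    int drain() {
        if (migrating) {
            throw new IllegalStateException("migration still in progress");
        }
        int replayed = 0;
        Runnable op;
        while ((op = deferredWrites.pollFirst()) != null) {
            op.run();
            replayed++;
        }
        return replayed;
    }
}
```

Because the queue lives in memory, deferred writes are lost if the process restarts. Writes that cannot wait are a reason to choose the customer managed option.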

Customer managed

This approach is suitable if you can schedule a brief downtime to perform the migration. For this process, you create a new hsm2 cluster using the latest hsm1 backup. After you add the same number of HSMs to the hsm2 cluster as are in the hsm1 cluster, stop the application, reconfigure the CloudHSM client library to connect to the hsm2 cluster, and restart the application.

Create an hsm2 cluster from backup: CloudHSM makes periodic backups of your cluster at least once every 24 hours. If you need a more recent backup, follow the steps in Cluster backups in AWS CloudHSM to trigger a backup. If you set a backup retention policy when you created the cluster, that policy determines how long backups are retained before being purged. The default is 90 days. After you have identified the backup, create an hsm2 cluster from the CloudHSM console or the AWS CLI. In the console, choose hsm2m.medium as the HSM type, choose Restore cluster from existing backup as the Cluster source, and then select the designated hsm1 backup.

Update cluster for high availability: The new hsm2 cluster will have only one HSM instance. You can now add the same number of instances as hsm1 to this cluster. See adding an HSM to CloudHSM cluster. Based on your workload, add more HSMs to the cluster to ensure high availability. This is a good time to review the cluster to be sure that it follows best practices.

Reconfigure client SDKs: During the maintenance window, stop your application that is integrated with the CloudHSM client SDK, reconfigure the appropriate client SDK to talk to the new hsm2 cluster, and then restart the application. See Bootstrap the Client SDK to reconfigure the SDKs. An alternative to stopping and reconfiguring existing applications is to launch a new application instance with the CloudHSM client configured to talk to hsm2 and decommission the old application instance.

Monitor the application: Monitor your application’s health metrics and logs to verify that operations run against the new hsm2 cluster are successful. If you see increased errors, you can roll back to the hsm1 cluster and contact AWS Support for assistance.

Rollback: You can roll back by reconfiguring your application to communicate with the hsm1 cluster, similar to how you configured your application to talk to the hsm2 cluster.

Delete the hsm1 cluster: After you’re satisfied with your new hsm2 cluster, you can delete the hsm1 cluster to reduce costs. This action will create a backup that will be retained—CloudHSM doesn’t delete a cluster’s last backup.

High availability

If you need your CloudHSM cluster to be highly available during migration, AWS recommends that you follow the blue/green deployment methodology. The fundamental idea behind blue/green deployment is to shift traffic between two identical environments that are running different versions of a service or application. The blue environment represents the current version serving production traffic—the hsm1 cluster. The green environment is staged in parallel, running a different version of the service—an hsm2 cluster. After the green environment is ready and tested, production traffic is redirected from blue to green. If problems are identified, you can roll back by reverting traffic back to the blue environment.

We discuss two blue/green approaches in this post. Approach 1 uses a load balancer to route traffic between the blue and green configurations. Approach 2 uses CloudHSM multi-cluster configuration and requires application code changes. Each has pros and cons in terms of effort and cost.

If you have already implemented a multi-cluster configuration in your application, you can follow Approach 2; otherwise, we recommend Approach 1.

Keep the following in mind when you implement either of these approaches:

You need to create the hsm2 cluster from the hsm1 backup as described in Customer managed.

If you need to support write operations during migration, you will need to run additional processes to make sure the data is in sync between the blue and green clusters. See Use cases to learn about different scenarios and plan accordingly.

Approach 1

For this approach, you create two separate but identical client environments. One environment (blue) runs the current application and the client SDK that connects to the hsm1 cluster. The other environment (green) runs the same application with the client SDK configured to talk to the hsm2 cluster. You then use a load balancer—such as Application Load Balancer (ALB)—to selectively route traffic between blue and green using the weighted target groups routing feature of ALB or an equivalent feature in your load balancer.

You can start by directing a small percentage of your application traffic to green. When you’re confident that green is performing well and is stable, shift traffic to green and shut down blue.

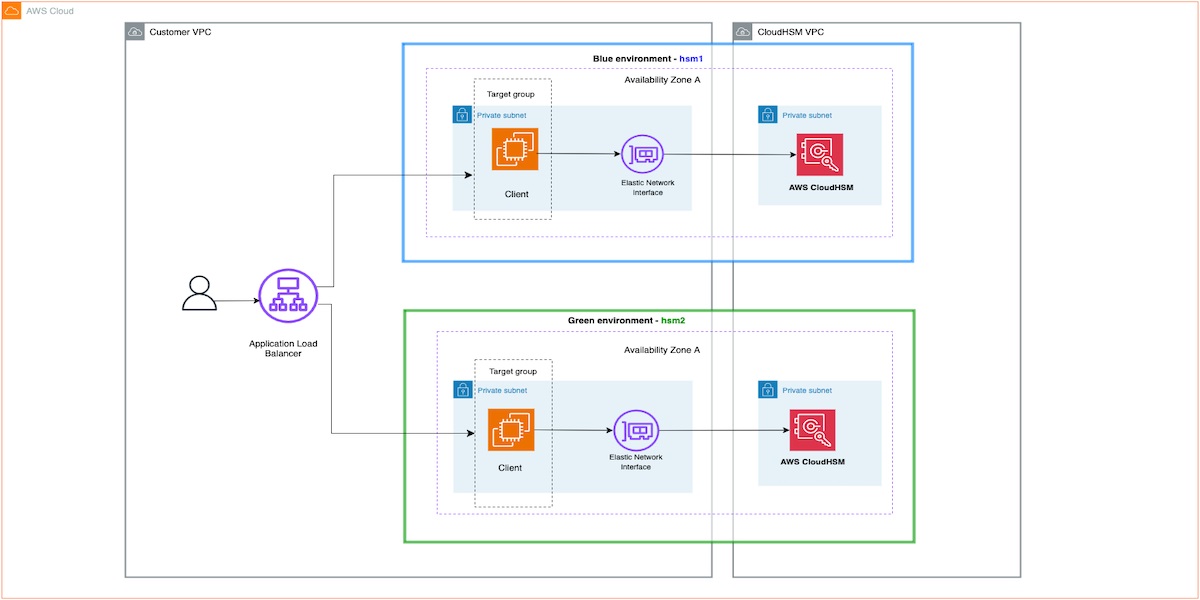

Figure 1: Blue/green migration architecture

The following are the steps of the migration architecture shown in Figure 1:

Create an hsm2 cluster from an hsm1 backup as described in Customer managed. Make sure you create the new cluster in the same Availability Zones as the existing CloudHSM cluster. This will be your green environment.

Spin up new application instances in the green environment and configure them to connect to the new hsm2 cluster.

Add the new client instances to a new target group for the ALB.

Next, use the weighted target groups routing feature of ALB to route traffic to the newly configured environment.

Each target group weight is a value from 0 to 999. Requests that match a listener rule with weighted target groups are distributed to these target groups based on their weights.

For more information, see Fine-tuning blue/green deployments on application load balancer.

You can follow the canary deployment pattern to roll out the hsm2-integrated application to a subset of users before making it widely available, while the hsm1-integrated application serves most of the users. To start, you can configure the blue target group with a weight of 90 and the green target group with a weight of 10; the ALB will route 90 percent of the traffic to blue and 10 percent to green.

Monitor applications to verify that operations to green are successful (see Monitoring). After you’re satisfied with the response from green, you can update the weights to 0 and 100 for blue and green to completely switch over to green and then shut down blue.
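The weight arithmetic in the steps above can be sketched as follows. This models only the proportions, it does not call any AWS API, and the WeightedTargetGroups class and its method names are hypothetical. Rejecting the case where both weights are zero is a choice made for this sketch.

```java
/** Sketch: how two weighted target groups split traffic between blue and green. */
final class WeightedTargetGroups {

    /** Target group weights range from 0 to 999, as described in this post. */
    private static void check(int weight) {
        if (weight < 0 || weight > 999) {
            throw new IllegalArgumentException("weight must be between 0 and 999");
        }
    }

    /** Fraction of matching requests routed to green: its weight over the total. */
    static double greenShare(int blueWeight, int greenWeight) {
        check(blueWeight);
        check(greenWeight);
        int total = blueWeight + greenWeight;
        if (total == 0) {
            throw new IllegalArgumentException("at least one weight must be positive");
        }
        return (double) greenWeight / total;
    }

    /** Maps a slot in [0, blueWeight + greenWeight) to the group that owns it. */
    static String pick(int blueWeight, int greenWeight, int slot) {
        greenShare(blueWeight, greenWeight); // reuse the validation above
        if (slot < 0 || slot >= blueWeight + greenWeight) {
            throw new IllegalArgumentException("slot out of range");
        }
        return slot < blueWeight ? "blue" : "green";
    }
}
```

With weights of 90 and 10, greenShare returns 0.10. With weights of 0 and 100 it returns 1.0, which corresponds to the full switchover.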

For alternate approaches, such as DNS weighted distribution, see Blue/Green Deployments on AWS.

Approach 2

This approach uses a single application environment that talks to both the hsm1 and hsm2 clusters. To shift traffic between the blue and green environments, you use the CloudHSM multi-cluster configuration, which allows a single client SDK to communicate with two or more CloudHSM clusters. Your application code needs to be modified to communicate with both the blue and green clusters. In this post, we use a JCE SDK multi-cluster configuration, as shown in Figure 2.

Figure 2: Multi-cluster migration architecture

The solution uses the basic blue/green deployment steps using a multi-cluster configuration and is designed for common use cases based on the type of CloudHSM operations performed during migration. We also cover how keys can be synchronized between the blue and green clusters and how to roll back.

Create an hsm2 cluster from an hsm1 backup

As described in Customer managed, create an hsm2 cluster from an hsm1 backup. Make sure you create the new cluster in the same Availability Zones as the existing CloudHSM cluster. This will be your green environment.

Modify the application to talk to both blue and green

In this step, you modify the application to use multi-cluster configuration to talk to both blue and green. When using a multi-cluster configuration, you need to configure the CloudHSM provider in the code instead of using the default config file.

In the application code, instantiate two providers: providerHsm1 pointing to blue cluster and providerHsm2 pointing to green cluster. Then update the business logic to switch traffic between blue and green using these providers.

Instantiate providers as shown in the following example. See Connecting to multiple clusters with CloudHSM CLI for a detailed explanation. Replace the following:

<hsm-ca-file-path>: File path to the hsm1 trust anchor certificate that you used to initialize the cluster.

<hsm1-cluster-id>: The unique cluster ID of the hsm1 cluster.

<hsm2-cluster-id>: The unique cluster ID of the hsm2 cluster.

    // hsm1HostName and hsm2HostName hold the IP address of an HSM in each cluster.

    // Provider for the blue (hsm1) cluster.
    CloudHsmProviderConfig hsm1Config = CloudHsmProviderConfig.builder()
        .withCluster(
            CloudHsmCluster.builder()
                .withHsmCAFilePath("<hsm-ca-file-path>")
                .withClusterUniqueIdentifier("<hsm1-cluster-id>")
                .withServer(CloudHsmServer.builder().withHostIP(hsm1HostName).build())
                .build())
        .build();
    CloudHsmProvider providerHsm1 = new CloudHsmProvider(hsm1Config);
    // Register the provider only if it is not already registered.
    if (Security.getProvider(providerHsm1.getName()) == null) {
        Security.addProvider(providerHsm1);
    }

    // Provider for the green (hsm2) cluster.
    CloudHsmProviderConfig hsm2Config = CloudHsmProviderConfig.builder()
        .withCluster(
            CloudHsmCluster.builder()
                .withHsmCAFilePath("<hsm-ca-file-path>")
                .withClusterUniqueIdentifier("<hsm2-cluster-id>")
                .withServer(CloudHsmServer.builder().withHostIP(hsm2HostName).build())
                .build())
        .build();
    CloudHsmProvider providerHsm2 = new CloudHsmProvider(hsm2Config);
    if (Security.getProvider(providerHsm2.getName()) == null) {
        Security.addProvider(providerHsm2);
    }

Direct operations to blue and green using the respective providers.

    Cipher cipher1 = Cipher.getInstance("AES/GCM/NoPadding", providerHsm1);
    Cipher cipher2 = Cipher.getInstance("AES/GCM/NoPadding", providerHsm2);

Switch to green and shut down blue

Monitor the application to verify that operations on green are successful. See the Monitoring section. After you are satisfied with the response from green, you can update the application code to completely switch over to green.
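One way to structure the switch in application code is a small selector that sends a configurable percentage of operations to green. The sketch below is an assumption about how you might organize this, not part of the CloudHSM SDK: TrafficSwitch and its methods are hypothetical names, and the class is generic so that it can hold the two JCE providers (or any other pair of handles).

```java
import java.util.concurrent.ThreadLocalRandom;
import java.util.concurrent.atomic.AtomicInteger;

/** Sketch: choose between a blue and a green handle by percentage. */
final class TrafficSwitch<T> {
    private final T blue;
    private final T green;
    // Percentage of operations sent to green; starts at 0 (all traffic on blue).
    private final AtomicInteger greenPercent = new AtomicInteger(0);

    TrafficSwitch(T blue, T green) {
        this.blue = blue;
        this.green = green;
    }

    /** 0 keeps all traffic on blue; 100 moves all traffic to green. */
    void setGreenPercent(int percent) {
        if (percent < 0 || percent > 100) {
            throw new IllegalArgumentException("percent must be between 0 and 100");
        }
        greenPercent.set(percent);
    }

    /** Picks a handle for one operation using a random bucket in [0, 100). */
    T choose() {
        return chooseForBucket(ThreadLocalRandom.current().nextInt(100));
    }

    /** Deterministic variant: buckets below the green percentage go to green. */
    T chooseForBucket(int bucket) {
        return bucket < greenPercent.get() ? green : blue;
    }
}
```

With a TrafficSwitch<Provider> built from providerHsm1 and providerHsm2, a call such as Cipher.getInstance("AES/GCM/NoPadding", providers.choose()) would direct each operation to one cluster or the other. Setting the percentage back to 0 returns all traffic to blue.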

Monitoring

During migration to hsm2, it’s important to monitor your application to confirm it’s working as expected and roll back if you notice increased errors. You can use your application logs and the CloudHSM client SDK logs to monitor the application.
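A rollback decision based on increased errors can be made explicit with a simple counter. This is a minimal sketch under stated assumptions: the ErrorRateMonitor class is hypothetical, the application reports the outcome of each operation against green, and the threshold and minimum sample count are values you would choose for your own workload.

```java
/** Sketch: track the error rate of operations sent to the green cluster. */
final class ErrorRateMonitor {
    private long total;
    private long failures;

    /** Record the outcome of one operation. */
    synchronized void record(boolean success) {
        total++;
        if (!success) {
            failures++;
        }
    }

    /** Fraction of recorded operations that failed; 0.0 when nothing is recorded. */
    synchronized double errorRate() {
        return total == 0 ? 0.0 : (double) failures / total;
    }

    /** True when enough samples exist and the error rate exceeds the threshold. */
    synchronized boolean shouldRollBack(double maxErrorRate, long minSamples) {
        return total >= minSamples && errorRate() > maxErrorRate;
    }
}
```

Requiring a minimum number of samples avoids rolling back because of a single early failure.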

Note: There are some known issues with hsm2 that will be fixed in future releases. See Known issues for AWS CloudHSM hsm2m.medium instances for a list of current known issues and their resolution status.

Use cases

Depending on the type of operations you perform on your CloudHSM cluster during migration, you need to run additional processes to make sure the data is in sync between the blue and green clusters. This will help avoid the split-brain scenario where blue and green clusters are in an inconsistent state if a write operation is performed during migration.

Read-only operations

During migration, if you only need to perform read operations—meaning you aren’t creating token keys—then the data between the clusters will be consistent. You can switch over to green completely following the blue/green-deployment methodology in Approach 1 or Approach 2.

Create/delete operations

If token keys need to be created during migration, the blue and green clusters need to be synchronized to make sure that read operations to the clusters are successful.

Write to blue: Initially, create operations can be directed to blue and read operations to both blue and green. In this case, the newly created keys need to be replicated to green. You can use the CloudHSM CLI key replicate command to synchronize keys. See Replicate keys.

Write to green: After you gain confidence in the read capability of the green cluster, you could begin swapping over the application to do write operations against the green cluster. In this case, if you’re still reading from both blue and green, you can replicate keys to blue using the CloudHSM CLI key replicate. See Replicate keys.
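In either direction, the keys to replicate are the ones present in the cluster you write to and absent from the other. The sketch below computes that difference. It assumes that you have already collected the key labels from each cluster (for example, from key list output) and that labels identify keys uniquely in your environment; KeySyncPlan and missingIn are hypothetical names.

```java
import java.util.Set;
import java.util.TreeSet;

/** Sketch: work out which keys still need to be replicated to the other cluster. */
final class KeySyncPlan {

    /** Returns the labels found in source but not in destination, in sorted order. */
    static Set<String> missingIn(Set<String> source, Set<String> destination) {
        Set<String> missing = new TreeSet<>(source);
        missing.removeAll(destination);
        return missing;
    }
}
```

While writes go to blue, call missingIn(blueLabels, greenLabels). After writes move to green, swap the arguments.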

Replicate keys

Keys can be replicated between CloudHSM clusters that are created from the same backup using CloudHSM CLI with multi-cluster configuration.

Step 1: Configure multi-cluster

Add blue and green clusters to the multi-cluster configuration. See Connecting to multiple clusters with CloudHSM CLI.

Step 2: Replicate keys from source to destination

Make sure that the key owner and the users that the key is shared with exist in the destination cluster. Also, the crypto user or admin performing the operation needs to sign in to both clusters.

Run the key replicate command to replicate the keys from blue to green or vice versa as shown in the following example.

List keys in hsm1:

    crypto_user@cluster-<hsm1-cluster-id> > key list --cluster-id cluster-<hsm1-cluster-id>

List keys in hsm2:

    crypto_user@cluster-<hsm2-cluster-id> > key list --cluster-id cluster-<hsm2-cluster-id>

Replicate keys:

    crypto_user@cluster-<source-cluster-id> > key replicate --filter attr.label=example-aes-2 --source-cluster-id cluster-<source-cluster-id> --destination-cluster-id cluster-<destination-cluster-id>

Rollback

The complexity of a rollback depends on the stage of the migration and on which keys were created. Whether it happens during or after the migration, a rollback is straightforward if you aren’t using hsm2-specific features such as new key attributes. During the migration, you can roll back by pointing your application back to the hsm1 cluster. Reads and writes then go only to the hsm1 cluster, and the rollback is complete. If you created keys only in hsm2, you can replicate them back to hsm1.

The other rollback scenario is when you cannot replicate keys back to the hsm1 cluster. This can happen if you have fully migrated your application to hsm2 and have created more than 3,300 keys (the limit for hsm1) or are using hsm2-specific features. In this scenario, you need to change the application to return to a multi-cluster setup where reads are performed against both the hsm1 and hsm2 clusters (in case keys exist only in hsm2), but write operations go only to the hsm1 cluster. We recommend that you continue talking to both clusters and keep them in sync until the non-replicable keys are no longer needed and the cluster can be scaled back down.
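The two rollback scenarios reduce to a simple decision, sketched below. The RollbackCheck class and its Plan enum are hypothetical; the 3,300 key limit for hsm1 comes from this post, and whether you use hsm2-specific features is something you need to determine for your own keys.

```java
/** Sketch: decide which rollback path applies, based on the two conditions above. */
final class RollbackCheck {
    // Key limit for hsm1, as stated in this post.
    static final int HSM1_MAX_KEYS = 3_300;

    enum Plan {
        /** Keys fit on hsm1: replicate them back and point the application at hsm1. */
        REPLICATE_BACK,
        /** Some keys cannot move: read from both clusters and write only to hsm1. */
        KEEP_BOTH_CLUSTERS
    }

    static Plan plan(int totalKeys, boolean usesHsm2OnlyFeatures) {
        if (totalKeys > HSM1_MAX_KEYS || usesHsm2OnlyFeatures) {
            return Plan.KEEP_BOTH_CLUSTERS;
        }
        return Plan.REPLICATE_BACK;
    }
}
```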

Conclusion

In this post, we described strategies to migrate an hsm1.medium CloudHSM cluster to hsm2m.medium. We explored commonly used blue/green deployments and the migration options that AWS CloudHSM provides. We also explored common use cases, steps to avoid common pitfalls, and rollback options.

If you have questions about this post, contact AWS Support.

Roshith Alankandy

Roshith is a Security Consultant at AWS, based in Australia. He helps customers accelerate their cloud adoption journey with security, risk, and compliance guidance and specializes in cryptography. When not working, he enjoys spending time with his family and playing football.